How to generate subtitles with Whisper

- Introduction

- Upload files to your Google drive

- Using colab, follow the steps

- Parameters to transcribe with Whisper

- A friendly code to change parameters

- Translate with...

- Tips

Introduction

You dream of making your own subtitles for videos and you have the programs to edit SRT files, but learning a new language is not your strong point. OpenAI Whisper is what you need: it's accurate with some languages, and it's free!

Now let's see how you can do it!

Upload files to your Google drive

First of all, log in to your Google account and go to your Google Drive.

Drag and drop your files into it, or right-click in your Google Drive to upload them. (I choose to upload only the audio of the video, because it takes less time.)
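If you only have the video file, the audio can be extracted before uploading. The following is a minimal sketch, assuming ffmpeg is installed on your computer and can write MP3 files; the function names are hypothetical and not part of any tool mentioned in this guide.

```python
import subprocess
from pathlib import Path
from typing import List


def build_audio_extract_command(video_path: str, audio_path: str) -> List[str]:
    """Return an ffmpeg command that writes only the audio of a video."""
    return [
        "ffmpeg",
        "-i", video_path,  # input video
        "-vn",             # ignore the video stream
        audio_path,        # output file; ffmpeg picks the codec from the extension
    ]


def extract_audio(video_path: str) -> Path:
    """Run ffmpeg and return the path of the .mp3 file created next to the video."""
    audio_path = Path(video_path).with_suffix(".mp3")
    command = build_audio_extract_command(video_path, str(audio_path))
    subprocess.run(command, check=True)  # raises if ffmpeg fails
    return audio_path
```

Calling extract_audio("my_video.mp4") would create my_video.mp3 in the same folder, ready to upload.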

Now you have two choices:

- Go to the original page: https://colab.research.google.com/drive/1WLYoBvA3YNKQ0X2lC9udUOmjK7rZgAwr?usp=sharing

- Or use my modified version of the original page, which has an interactive interface to change the parameters more easily: see the section « A friendly code to change parameters ».

Then follow the instructions on the page. It is a tutorial for using Whisper, but I will add some useful information below, so only do the introduction before « Step One ». Open your Colab page in Google Chrome, Firefox, or whichever web browser you prefer.

Using colab, follow the steps

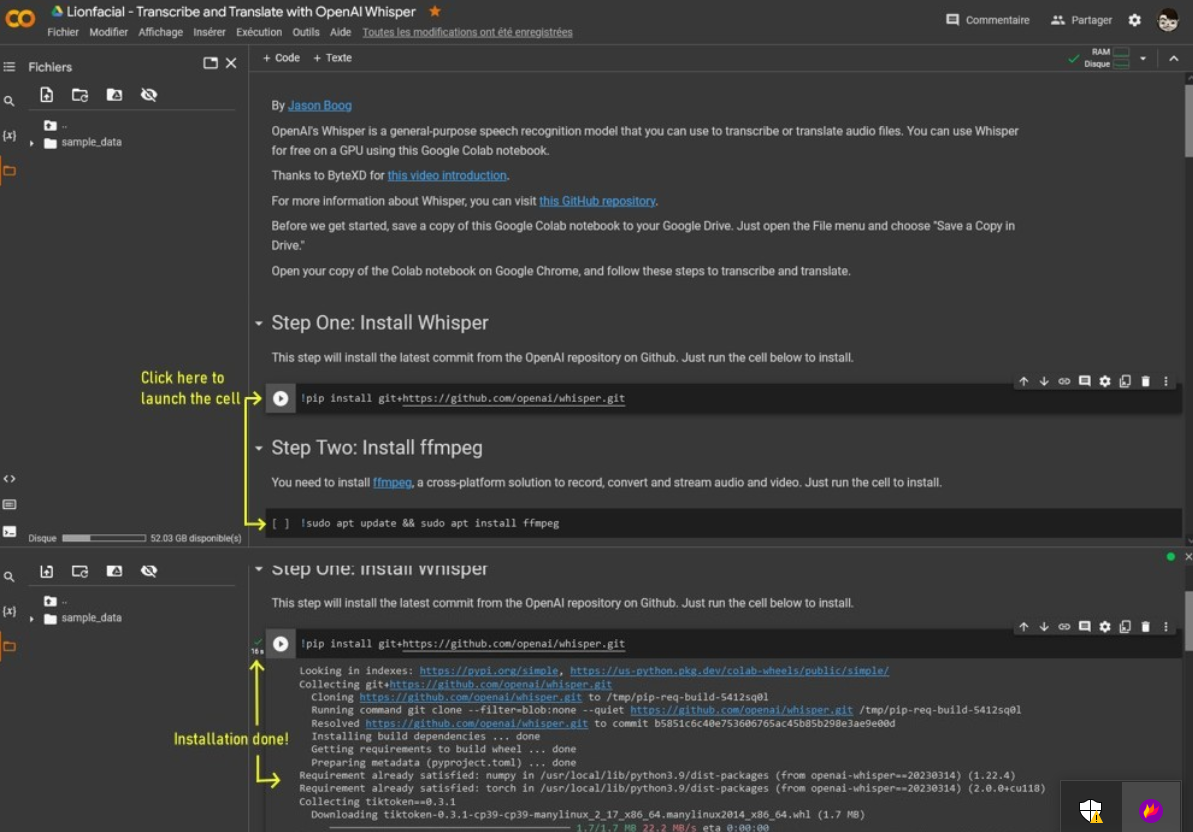

To begin using Whisper, follow the instructions on your page (steps one and two):

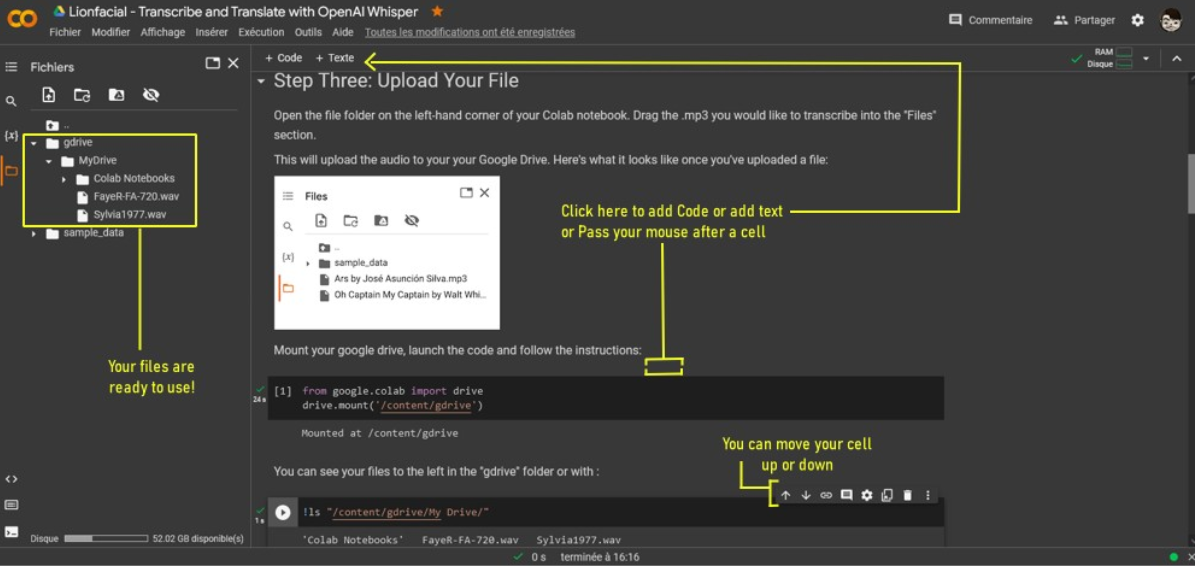

Now you need to mount your Google Drive.

First add a code cell (at step three) by clicking [+Code] at the top of the page or below a cell, and enter:


from google.colab import drive

drive.mount('/content/gdrive')

You will see a new folder named « gdrive » in the file panel on the left (it takes a little time); your files are inside it. (You can move a cell with its arrow buttons.)
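To find the exact path of an uploaded file, you can list the media files under the mounted folder. This is a minimal sketch, assuming the Drive contents appear under /content/gdrive/MyDrive after the mount above; find_media_files is a hypothetical helper, and searching a very large Drive this way can be slow.

```python
from pathlib import Path
from typing import List

# Extensions of common audio and video files.
MEDIA_EXTENSIONS = {".mp3", ".mp4", ".mpeg", ".mpga", ".m4a", ".wav", ".webm"}


def find_media_files(root: str = "/content/gdrive/MyDrive") -> List[Path]:
    """Return the media files found under root, sorted by path."""
    root_path = Path(root)
    if not root_path.is_dir():
        return []  # Drive not mounted yet, or the folder has another name
    return sorted(
        p for p in root_path.rglob("*")
        if p.is_file() and p.suffix.lower() in MEDIA_EXTENSIONS
    )


# Print every candidate path so it can be copied into the whisper command.
for path in find_media_files():
    print(path)
```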


Parameters to transcribe with Whisper

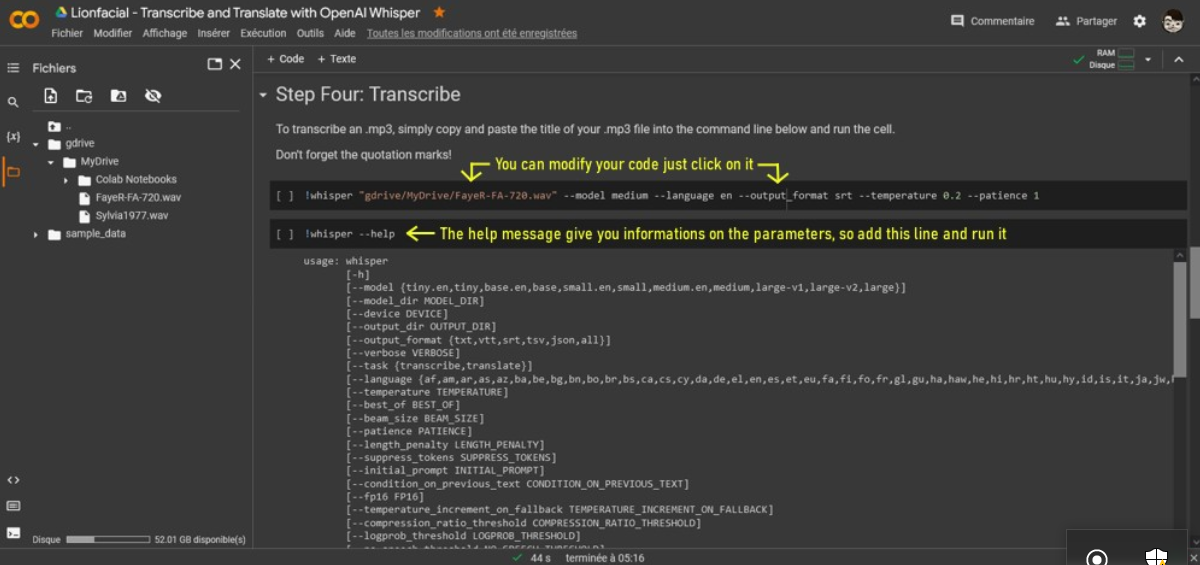

You can modify any cell on your page: just click on it or on the cell's « Modify » button. Change the command line of step four: put in the path of your file and add parameters if you want. To see which parameters Whisper has, add a code cell with:

!whisper --help

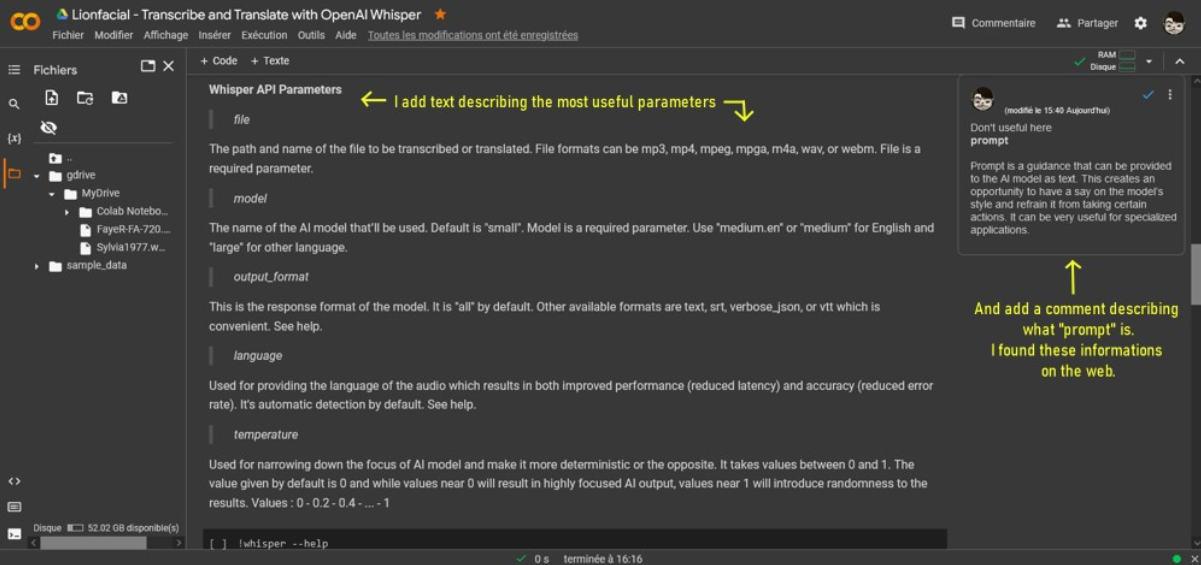

Here is a description of the most useful parameters:


- The input file can be mp3, mp4, mpeg, mpga, m4a, wav, or webm. Write its path in quotes.

- --model : Followed by the name of the AI model. The default is « small » (newer Whisper releases may use a different default; the help message shows the one for your version), so change it to medium.en or medium for English, and to large for another language.

- --output_format : The format of the transcription file it creates. The default is all, so change it to srt. See the help message for the other formats.

- --language : The language of the audio (providing it improves performance and accuracy). By default, the language is detected automatically. See the help message for the language codes.

- --temperature : Makes the AI model more or less deterministic. The default is 0 and the maximum is 1. Values near 0 give highly focused output but can produce errors such as repeated or missed text; values near 1 introduce randomness into the results, and you lose some accuracy.

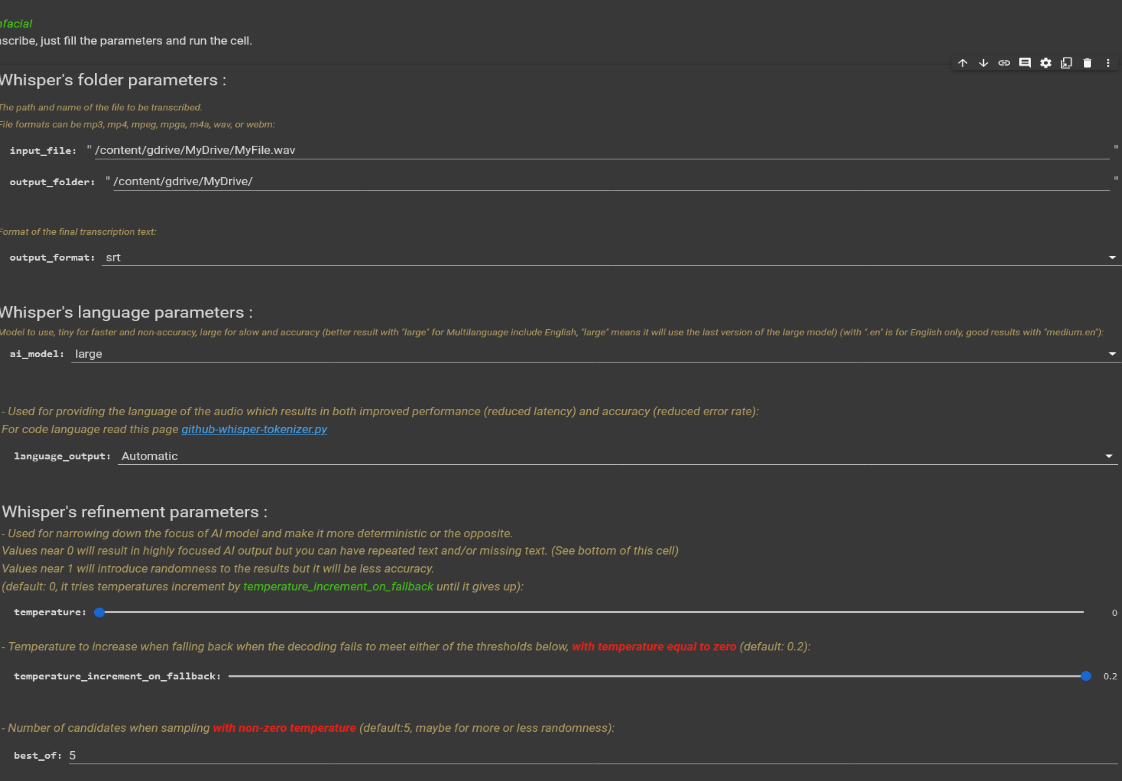
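The parameters above can be assembled into one command line. This is a minimal sketch: build_whisper_command is a hypothetical helper, and the default values chosen here (medium, srt, temperature 0) are assumptions for illustration, not Whisper's own defaults.

```python
import shlex
from typing import Optional


def build_whisper_command(
    audio_path: str,
    model: str = "medium",
    output_format: str = "srt",
    language: Optional[str] = None,
    temperature: float = 0.0,
) -> str:
    """Return a whisper command line (prefix it with '!' in a Colab cell)."""
    parts = [
        "whisper", shlex.quote(audio_path),  # quote paths that contain spaces
        "--model", model,
        "--output_format", output_format,
        "--temperature", str(temperature),
    ]
    if language is not None:
        # Leave the option out to keep automatic language detection.
        parts += ["--language", language]
    return " ".join(parts)


print(build_whisper_command("/content/gdrive/MyDrive/my audio.mp3", language="ja"))
```

Copy the printed line into a cell, add « ! » in front of it, and run it.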

A friendly code to change parameters

Is it too tedious to change the line every time? Don't worry, I created an interface to simplify the work. Here is the link to my modified Google Colab page: https://colab.research.google.com/drive/1ZcFAgY2zudea415VPtp3H8jtRY1zIhsQ?usp=drive_link

And this is my Google Colab page for insanely-fast-whisper (outdated): https://colab.research.google.com/drive/1wj6f0pljYz_Srst_HfJlkWuF_6Ma7Zck?usp=drive_link

Next, follow the instructions on the page and enjoy the interface.

Translate with...

Step 5 of the page is useless without Google Colab Pro, so I have never tried to translate a file with it.

Just download the text file that Whisper created, from the file panel on the left of the page. It is an SRT file if you chose only this output format.

Now translate with your favorite translator: Google Translate, DeepL Translator, Reverso, yourself because you're very smart or a masochist, or a dictionary because they still exist and you really are a masochist. Or anything else...

All of this takes time: you can spend 2 hours on 5 to 10 minutes of video/audio to make a good subtitle file.
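When translating, only the text of each subtitle should change, never its number or timing. The sketch below shows one way to separate them, assuming a well-formed SRT file (number, timing line, then text, with blank lines between subtitles); Cue, parse_srt and format_srt are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Cue:
    index: int
    timing: str  # e.g. "00:00:01,000 --> 00:00:03,500"
    text: str    # may span several lines


def parse_srt(content: str) -> List[Cue]:
    """Split SRT content into cues; malformed blocks are skipped."""
    cues = []
    content = content.lstrip("\ufeff").replace("\r\n", "\n")
    for block in content.strip().split("\n\n"):
        lines = block.strip().split("\n")
        if len(lines) < 3 or "-->" not in lines[1] or not lines[0].strip().isdigit():
            continue
        cues.append(Cue(int(lines[0]), lines[1].strip(), "\n".join(lines[2:])))
    return cues


def format_srt(cues: List[Cue]) -> str:
    """Rebuild SRT content from cues, keeping numbers and timings unchanged."""
    return "\n\n".join(f"{c.index}\n{c.timing}\n{c.text}" for c in cues) + "\n"
```

After parse_srt, replace each cue's text with its translation and write the result of format_srt to a new .srt file.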

Tips

- Sometimes a second run of the same command line gives a different transcription. But be warned: it overwrites the first file.

- If parts of a dialogue are missing from the transcription, retry with only that part of the audio. Whisper may or may not detect it; if it does not, change parameters such as --temperature.

- For good results, I recommend these settings: temperature=0, temperature_increment_on_fallback=0.2, beam_size=7, patience=2.0

- I recommend making several transcriptions of the same file with different settings: one with "turbo", another with "large-v2" (slow), and another with "insanely fast whisper". This way you have multiple references if one of them fails on a subtitle line.

- Be careful with Google Colab: it runs on a virtual machine, and you can be disconnected if you leave it idle for a short while. You will lose all the files created, so download them quickly or save them to your Google Drive.

- Be smart: Whisper is not a magical tool that works perfectly. Before sharing the files, check that nothing is missing or otherwise wrong. You can get many repeated words, bad timing, dialogue that doesn't exist, missing dialogue, etc.
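Part of this checking can be automated. The sketch below flags a few of the problems listed above (repeated text, overlapping or zero-length timings, empty subtitles); check_srt is a hypothetical helper, it assumes a well-formed SRT file, and it cannot replace watching the video with the subtitles.

```python
import re
from typing import List

TIMING = re.compile(
    r"(\d+):(\d{2}):(\d{2})[,.](\d{3})\s*-->\s*(\d+):(\d{2}):(\d{2})[,.](\d{3})"
)


def to_seconds(h: str, m: str, s: str, ms: str) -> float:
    return int(h) * 3600 + int(m) * 60 + int(s) + int(ms) / 1000


def check_srt(content: str) -> List[str]:
    """Return human-readable warnings for suspicious subtitles."""
    warnings = []
    previous_text, previous_end = None, 0.0
    for block in content.replace("\r\n", "\n").strip().split("\n\n"):
        lines = block.strip().split("\n")
        match = TIMING.search(lines[1]) if len(lines) >= 2 else None
        if match is None:
            continue  # not a subtitle block
        number = lines[0].strip()
        start = to_seconds(*match.groups()[:4])
        end = to_seconds(*match.groups()[4:])
        text = " ".join(lines[2:]).strip()
        if not text:
            warnings.append(f"cue {number}: empty text")
        if end <= start:
            warnings.append(f"cue {number}: has zero or negative duration")
        if start < previous_end:
            warnings.append(f"cue {number}: overlaps the previous cue")
        if text and text == previous_text:
            warnings.append(f"cue {number}: repeats the previous text")
        previous_text, previous_end = text, end
    return warnings
```

Run check_srt on the content of the downloaded file and look at each reported subtitle by hand; a repeated line is sometimes genuine dialogue.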

Last update: 2025-01-21